Hi, we're Team Triple Squared, and we are working on a project on everyday rich social communication. Our project, BEARHUG, aims to enhance physical interactions for long-distance couples using video chat by mimicking physical interaction through bear avatars that relay physical gestures. Check out our Heroku app or GitHub repo. You can also look at our user testing rounds (I) and (II) and see our final source files.

Why "Triple Squared?" We are a team of three: Emily Cheng, M.J Ma, and Sarah Sterman. Our initials are CC, MM, and SS (Emily's legal name is Cheng Cheng), thus C^2 + M^2 + S^2.

Project Manager

Loves art, backpacking, urban exploration, running, social dance. Huge outdoors enthusiast and Couchsurfing fan.

@resalire

Design Lead

A designer, an artist and a programmer. A dancer, a yogi and a climber. Loves life and always up for new adventures :)

@hmily

Evaluation Lead

Builds things, reads things, cooks things, sometimes all at the same time.

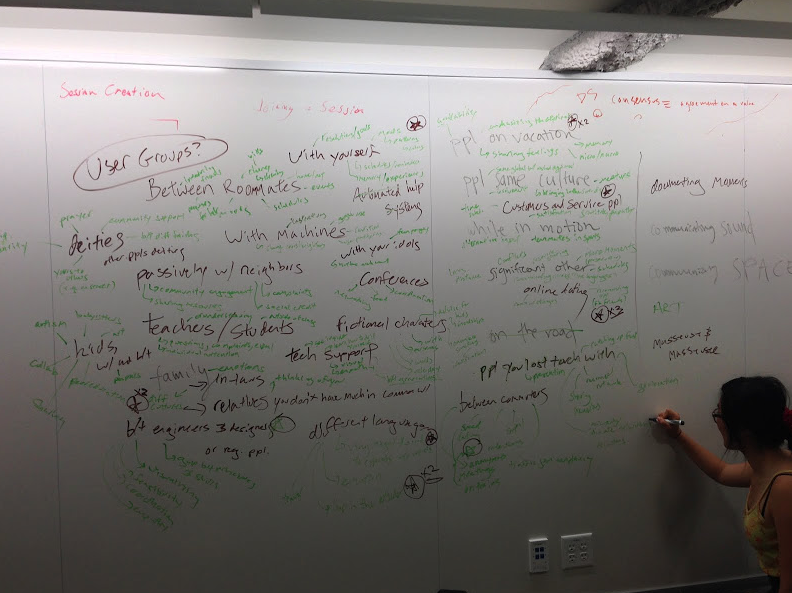

Ideation and Team Forming

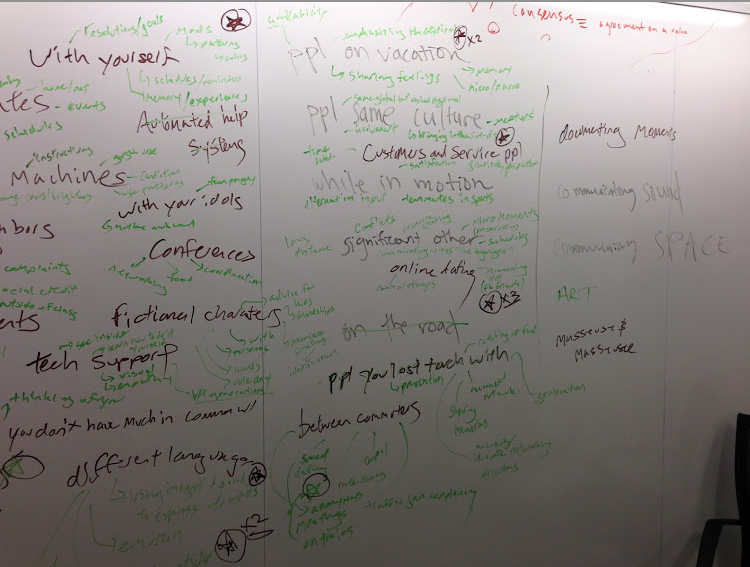

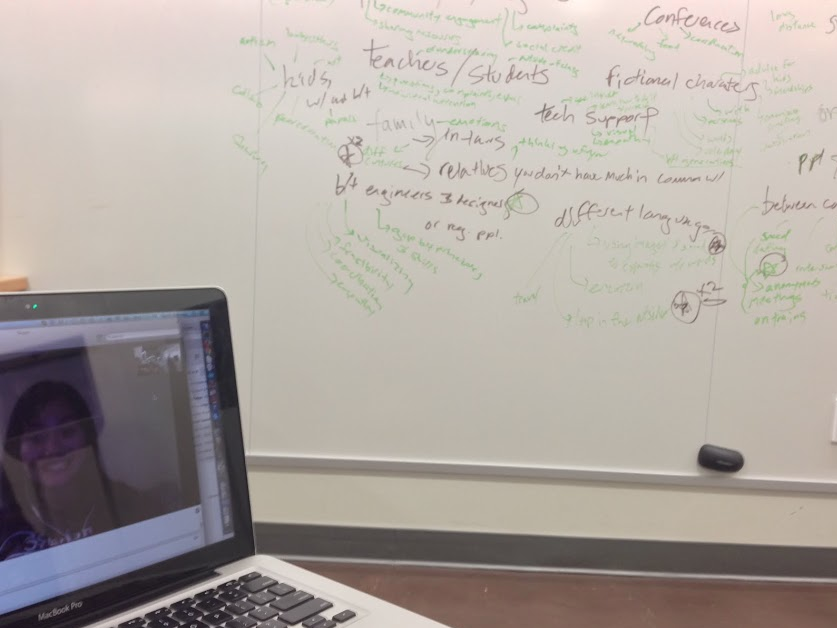

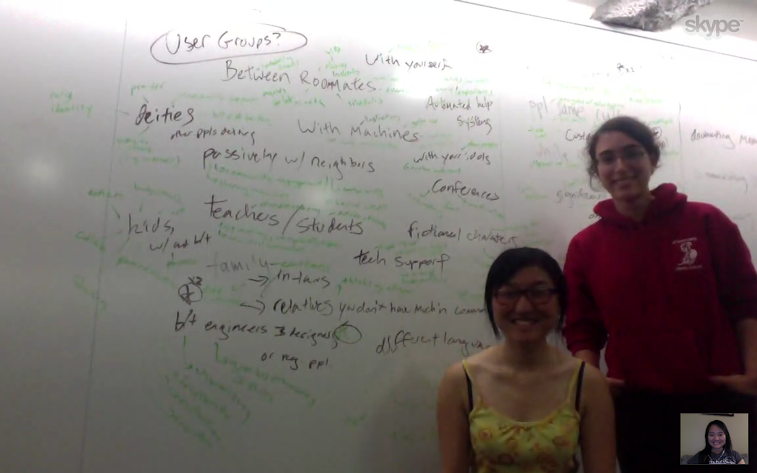

Our team brainstormed a wide range of ideas for communication. Our tactic was to first brainstorm different user groups and situations, then come up with communication needs for each category. We jotted all of our ideas on a whiteboard, and MJ even Skyped in to join the conversation despite being in NY!

Photos from our brainstorming session:

Narrowing our ideas down to three, we have:

1) Everyday communication of little + authentic things

Communication Need: We share a lot of the big events in our lives, but don't have a way of feeling like we're a part of the daily gestalt or the small things. This is a way both to record the little good things for yourself and to share them with friends, so you feel a part of their lives without the pressure that comes from seeing the kind of curated personalities you see on Facebook, since you're documenting the details, not the big accomplishments.

Idea: I have a column on a webpage, and you have a column, and we post pictures, sound clips, videos, and pieces of text from our days, and there's no context or differentiation except the column between us. Or even, you have a group of friends, and you can select names, and the inputs of the selected people just show up as a big collage of sights and sounds. So it emphasizes the little details in our lives, not the big important stuff that goes on Facebook. And it's displayed all together with stuff our friends posted, so we feel more connected to all parts of their lives, not just text or deliberate pictures.

Target User: Friends or family members who are far away, especially people you don't talk to very often, or don't connect well with in conversation but still care about. Or, on the flip side, people who are very close and want to record and preserve authentic little moments in their lives (e.g., significant other, parent-child).

Context: Input is done as you go about your daily life, whenever a little thing catches your eye. Reading it is done when you have free time you might be using to browse Facebook, for instance. This would involve immediate real-time communication, but also a way to create a log to preserve memories of these authentic moments.

2) Family and In-laws: communication between people of different cultures and customs

Communication Need: We are all influenced by the family we grow up in - from how we eat breakfast to our values and expectations. As people and families unite through relationships and marriage, the differences in our family traditions and backgrounds play an important role in forming this new unity and there is a pressing communication need for the differences.

Idea: The idea is to create an online platform where a user's family profile can be built through their answers to a series of questions, and the profile can then be translated into a form another user can understand through data visualization. The questions will be generated based on the users' relationships and will progress over time as the relationships progress. Besides the active questions, passive data can also be collected from the users' daily activities for analysis.

Target User: Couples in a relationship, in-laws.

Context: Couples can get to know each other's family backgrounds as their relationship progresses, and this will help them understand their differences and conflicts better. As a couple moves further forward in their relationship and starts prepping for marriage, the in-laws can also start to get to know each other on a deeper level.

3) Online dating: through personalized recommendations from online social networks

Communication Need: In real-life situations, especially in certain cultures, potential dates are directly introduced by friends or family, who play the "matchmaker" role, i.e. communicating perceived compatibility to two people. This is often successful because they know the two people personally and can perhaps better gauge their personalities and potential compatibility. Currently online dating doesn't match this real-world model because the people whose profiles you read on a dating site cannot be verified and they are far removed from your social network.

Idea: The idea is to create an app that users can use on a daily basis and in their free time to play "matchmaker" between friends on an online social network (i.e. via the Facebook API). The benefit is that social networks are much more vast than real-life friend groups and thus there is more reach. Perhaps similar to the concept of setting up blind dates, the user either creates pairings or is presented with pairing possibilities that they identify as being potentially compatible or not, based on their real-life knowledge of the two people's personalities and preferences. The app would be constructed to allow users to do this on a daily basis as a casual, fun activity, similar to how people use Tinder and swipe through profiles on a multiple-times-per-day basis.

Target User: Young to middle-aged adults who have a sizable Facebook network and are either single or have many single friends.

Context: As mentioned before, users would use this app to create pairings on a daily basis as a casual, fun activity, similar to how people use Tinder and swipe through profiles on a multiple-times-per-day basis. It is a low-stakes environment that engages the user whenever they are bored and have downtime.

Wizard of Oz

See our WoZ slide deck PDF Link.

We did WoZ testing on 2 prototypes:

1) Prototype 1: BluhBluh (BB): A web browser extension / bookmarklet that allows a user to easily share and discuss web pages with rich media: video and audio.

2) Prototype 2: GlowyTouch: A video chat for long distance couples to communicate physical touch and physical connection by using gestures that create glowing red spots on the user’s face and body, simulating touch.

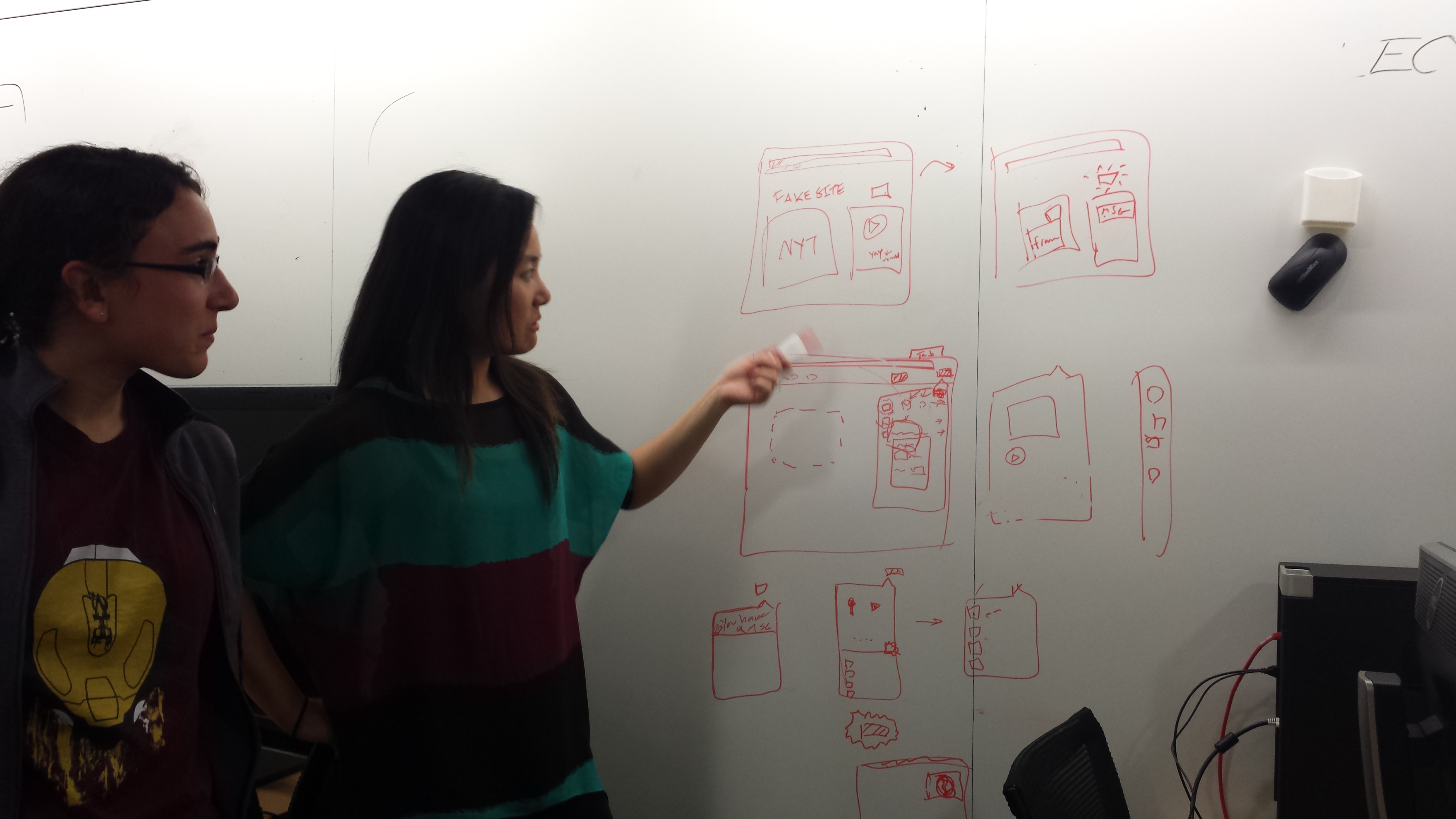

Team Triple Squared dedicated a lot of time to coding up hybrid functional prototypes in conjunction with WoZ techniques. Here we are brainstorming before our group coding session.

Then we did WoZ testing with 3 users per prototype. As an alternative to the PDF link, see our Google Docs slide deck online HERE.

Functional Prototype

Try our prototype: BEARHUG: Enhancing Physical Interactions in Video Chat. BEARHUG aims to enhance physical interactions for long distance couples in video chat by mimicking physical interaction through bear avatars that relay physical gestures - they can kiss, hug, and more. All bear animations were custom created by our team. To try our app, open up the link on 2 different computers to start chatting. Our app may not work in all browsers due to our use of WebRTC to enable browser video chat. See "Implementation" below for details. You can also check out our GitHub repository.

Overview

We had a valuable Google Hangout chat with our fantastic CS247 mentor, Paul, who suggested the idea of mediation to make things more fluent; direct interaction as we had tried to achieve through GlowyTouch is a 'hot' interaction that could cause awkwardness or anxiety, as it is revealing. He suggested instead that we could create an app where there is something mediating the interaction or a level of indirection, such as using avatars. And thus BEARHUG was born: a video chat app that allows for physical interaction through bear avatars that can kiss, hug, and more.

Current Features

1. The two video chat windows are of equal size and placed directly next to each other, taking up nearly the entire window. By doing so, we hope to create a sense of being close to your video chat partner.

2. Each person has a personal avatar. Via button clicks, these avatars can execute certain gestures with each other, such as hugging, kissing, and patting. By having the avatars interact, rather than the people directly, we can have interaction by proxy. It also allows more recognizable interactions than the glow in the previous iteration, since the avatars are vaguely humanoid and can show actions as a person would.

3. The avatars loiter within the frame of their person's video screen, but can cross between video screens without respect for boundaries when executing gestures. This physically disrupts the division between the two video screens and the real-life distance, hopefully giving a sense of connection and closeness to the participants. At the same time, the fact that the avatars must travel across the screen to reach the other and give it a hug or kiss is symbolic of the distance that is crossed to achieve this simulated physical interaction, which makes it feel authentic.
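One way the crossing behavior in item 3 could be modeled is sketched below in TypeScript. It assumes two equally sized panes side by side (as in item 1) and a simple linear walk toward the partner's avatar; the names `Layout`, `homeX`, `gestureX`, and `hasCrossedBoundary` are hypothetical and are not taken from the BEARHUG source.

```typescript
// Hypothetical layout: two equally sized video panes side by side.
interface Layout {
  windowWidth: number; // total width in pixels
}

type Side = "left" | "right";

// Resting x-position: the horizontal center of the avatar's own pane.
function homeX(side: Side, layout: Layout): number {
  const paneWidth = layout.windowWidth / 2;
  return side === "left" ? paneWidth / 2 : paneWidth + paneWidth / 2;
}

// x-position at progress t in [0, 1] while walking toward the partner's avatar.
function gestureX(side: Side, layout: Layout, t: number): number {
  const clamped = Math.min(1, Math.max(0, t));
  const start = homeX(side, layout);
  const target = homeX(side === "left" ? "right" : "left", layout);
  return start + (target - start) * clamped;
}

// True once the avatar has moved past the boundary between the two panes.
function hasCrossedBoundary(side: Side, layout: Layout, x: number): boolean {
  const boundary = layout.windowWidth / 2;
  return side === "left" ? x > boundary : x < boundary;
}
```

An animation loop could call `gestureX` with increasing `t` on each frame and use `hasCrossedBoundary` to decide when the avatar should be drawn over the partner's video.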

Implementation

We have implemented a basic live video chat system, along with basic avatar gestures and controls. Avatar control signals are sent via Firebase, allowing actions on one side of the video chat to be mirrored on the other.

Video chat is supported by WebRTC. WebRTC is the only viable option for video that fulfills our design needs (Google Hangouts has too many restrictions on design); however, it is inconsistently implemented across browsers. Thus we run into some unavoidable issues of functionality between computers that we will not be able to fix. This raises questions about the future viability of the app, which we will need to discuss.

We have developed basic animations for the avatars that will be a good framework for future gesture sets and possible avatar customization. All of these animations were custom created by our team, not simply taken from the internet. Thus we are confident in our ability to create more custom, unique animations to fulfill the needs of our app.
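The mirroring of avatar control signals described above can be illustrated with a small sketch. It does not use the Firebase API: `GestureChannel` is a hypothetical in-memory stand-in for the shared realtime store, and `GestureSignal` and `Client` are likewise illustrative names. The assumption is that every connected client, including the sender, is notified of each published gesture and plays the same animation.

```typescript
type Gesture = "hug" | "kiss" | "pat";

interface GestureSignal {
  sender: string; // hypothetical client id
  gesture: Gesture;
  sentAt: number; // milliseconds since epoch
}

type Listener = (signal: GestureSignal) => void;

// In-memory stand-in for the shared realtime store (Firebase in the app).
class GestureChannel {
  private listeners: Listener[] = [];

  subscribe(listener: Listener): void {
    this.listeners.push(listener);
  }

  // Every subscriber, including the sender, receives each published signal.
  publish(signal: GestureSignal): void {
    for (const listener of this.listeners) {
      listener(signal);
    }
  }
}

class Client {
  readonly played: string[] = [];
  readonly id: string;
  private channel: GestureChannel;

  constructor(id: string, channel: GestureChannel) {
    this.id = id;
    this.channel = channel;
    channel.subscribe((signal) => this.onSignal(signal));
  }

  // Called when the local user clicks a gesture button.
  send(gesture: Gesture): void {
    this.channel.publish({ sender: this.id, gesture, sentAt: Date.now() });
  }

  // Both sides record the same gesture; only the initiator label differs.
  private onSignal(signal: GestureSignal): void {
    const initiator = signal.sender === this.id ? "mine" : "partner";
    this.played.push(`${initiator}:${signal.gesture}`);
  }
}
```

Routing the sender's own gesture through the channel, rather than playing it locally right away, keeps both screens driven by the same stream of signals.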

Remaining Issues

1. Adding mouse event/swipe event controls of the animation: for instance, we could use webcam video motion / edge detection to allow the user to swipe their hands to nudge the bears and interact with the bears. Or we could use the mouse to drag the bears across the screens and more directly simulate bear-to-bear interaction. This is most important because the motion involves the user more fully, especially on touch screens.

2. Functionality for creation of specific video chat rooms with unique links for each couple. Currently anyone using our app would end up joining the same video chat. This should be easy to implement as it was done in the starter code of P3 Emoticons, but we wanted to focus on the avatar interaction functionality for our initial prototype, as it is the most important feature.

3. Allow animations to interact with you outside of the predefined band, for instance, allowing it to sit on a user's shoulder in the video chat, or even pat a user's head. This would allow for interaction between avatar and person, rather than just avatar-avatar, which adds a new, interesting dimension to explore.

4. Customization of avatars and more interaction options.

5. Explore gesture based animation control. This may not be possible, but would be the ideal interaction with the avatar. If you make a hug gesture, then the avatar also makes a hug gesture. If you move your face toward the screen for a kiss, the bears will kiss.
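For item 2 above (a unique link per couple), a minimal sketch of one possible approach follows. It is not the P3 Emoticons starter code: the `room` query parameter, the helper names, and the example base URL are all assumptions made for illustration, and `Math.random` is used only for brevity (it is not suitable where links must be unguessable).

```typescript
// Lowercase alphabet without look-alike characters (no i, l, o, 0, 1).
const ROOM_ALPHABET = "abcdefghjkmnpqrstuvwxyz23456789";

// Build a room id; `random` is injectable so the function can be tested.
function createRoomId(length: number = 8, random: () => number = Math.random): string {
  let id = "";
  for (let i = 0; i < length; i++) {
    const index = Math.floor(random() * ROOM_ALPHABET.length);
    id += ROOM_ALPHABET.charAt(index);
  }
  return id;
}

// Shareable link for a couple; the base URL passed in is a placeholder.
function roomLink(baseUrl: string, roomId: string): string {
  const url = new URL(baseUrl);
  url.searchParams.set("room", roomId);
  return url.toString();
}

// Read the room id back out of a link, or null if the parameter is absent.
function roomFromLink(link: string): string | null {
  return new URL(link).searchParams.get("room");
}
```

On page load, the app could call `roomFromLink` on the current address, create a new room with `createRoomId` when the result is `null`, and scope both the video call and the avatar signals to that room id.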

We are excited to further improve our prototype!

User Testing

See our User Testing Page, where we describe our user testing flow and results for our initial BEARHUG functional prototype: Introduction, Methods, Results, Discussion, and Implications. You can also check out our User Testing presentation slide deck.

Functional Prototype II

See our Functional Prototype II implementation updates page. Check out our prototype II BearHug herokuapp HERE!

User Testing II

Our user testing consent form can be found HERE

You can also check out our User Testing presentation slide deck. Try our updated and enhanced prototype: BEARHUG: Enhancing Physical Interactions in Video Chat.

Final Presentations

Link to BEARHUG Heroku app - 45 second video - 2 slide deck

Link to our source files zip

What couples are saying about BearHug:

"I feel sentimental and loved when I receive a kiss or hug from my partner"

"BearHug makes videochatting fun"

"I feel closer to my boyfriend"

"During the video chat, I liked that I could directly kiss my boyfriend's face with my bear"

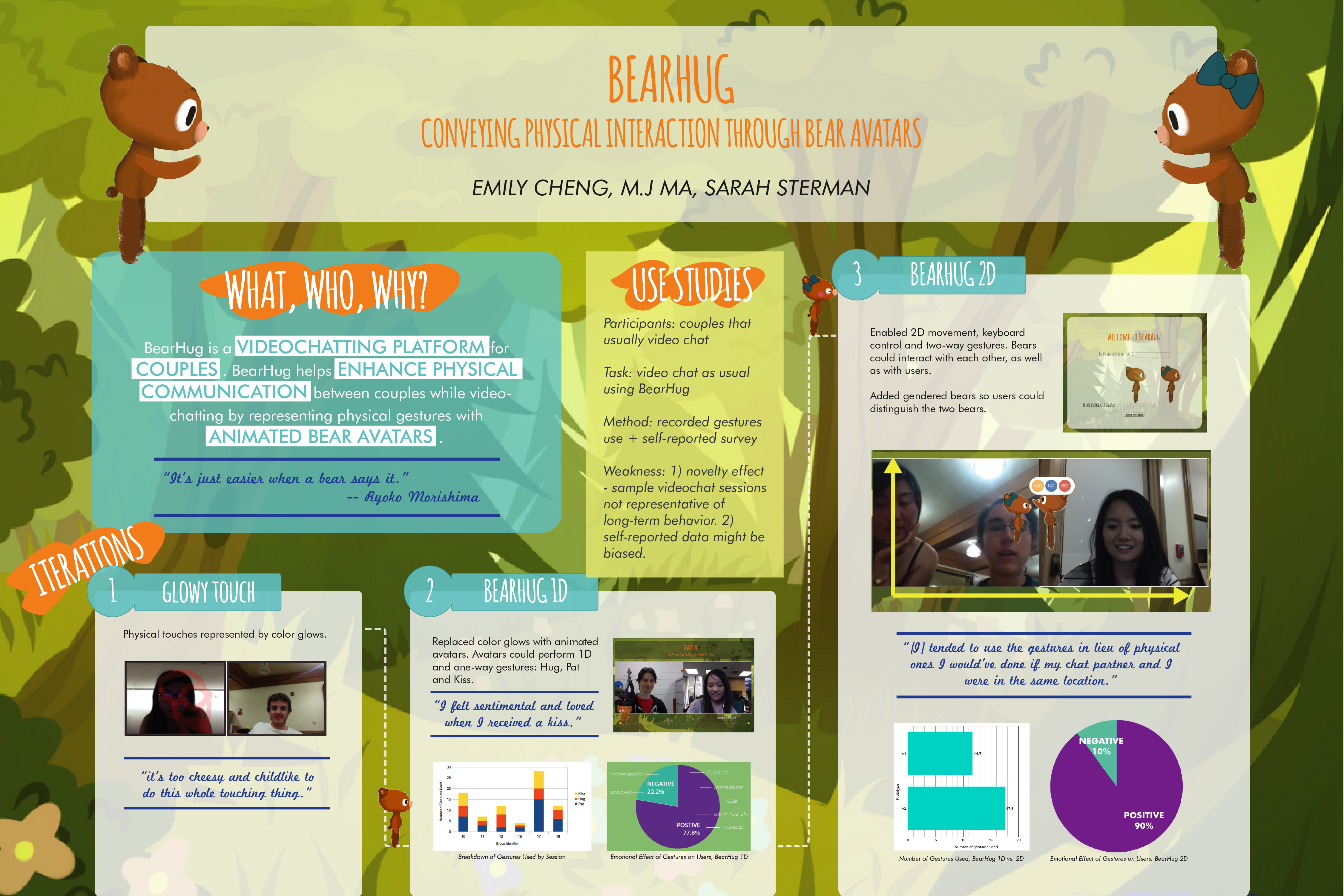

Project poster: